You said semantic - are you a linguist?

It is actually quite doable to start using semantic technologies. Read this and figure out how it would happen in your organization.

I have often faced a communication challenge: a person comes to me and asks, “What is semantic technology?” When one linguist in particular wanted to know how I define semantics (in IT), I had to step back and do some homework on the topic. We IT guys are notorious for abusing terms from any domain whenever we need yet another buzzword to mystify some basic concepts. So, this is what I came up with as I tried to explain to her what semantic technology is in a nutshell.

In Linguistics

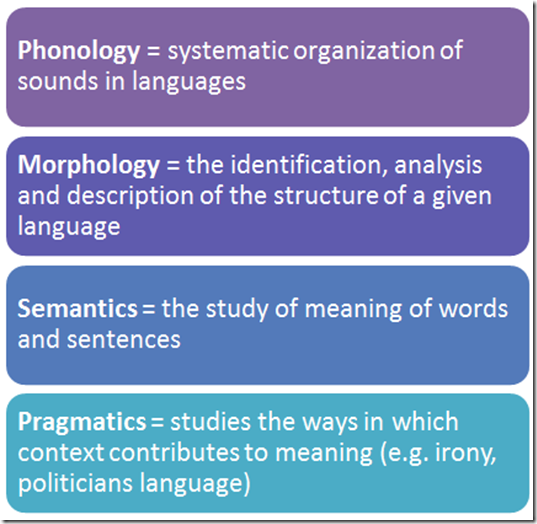

Let’s start from real science, where semantics is defined and has its boundaries relative to the other core terms of the study of communication. In linguistics, we can stack the core research domains as shown in the figure above.

We can probably say that semantics is a reasonably well-defined area of research in linguistics.

But my situation soon got worse. When explaining how semantic technology is often implemented in IT systems, I dropped yet another top-ten bullshit bingo word: ontology. She had done her master’s in philology and demanded further explanation of what I meant by ontology, another topic to demystify.

In Philosophy

Ontology = the philosophical study of the nature of being, becoming, existence, or reality.

In Semantic technology (IT guys talk)

Ontology = formally represents knowledge as a set of concepts within a domain, and the relationships between those concepts.

Semantics = encodes meanings separately from data and content files, and separately from application code (often utilizing computational linguistics).

She was merciful and said: you IT guys have a natural-born ignorance of philosophy and no knowledge of centuries of real science, but that is OK; keep on abusing fundamental terms, you have your reasons. I was pardoned and continued my story.

Directed graph: the foundation of semtech

A directed graph is a set of nodes connected by edges, where each edge has a direction associated with it. An edge together with the two nodes it connects forms a statement, and in RDF terminology such statements are known as triples: subject, predicate, object. For certain kinds of knowledge representation, the triple model is better suited than the relational model.
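To make the triple idea concrete, here is a minimal sketch of a directed graph stored as a set of (subject, predicate, object) triples, with a pattern-matching lookup where `None` acts as a wildcard. The `Triple` and `match` names and the example people and companies are hypothetical illustrations, not any product’s API.

```python
from typing import NamedTuple, Optional


class Triple(NamedTuple):
    """One directed, labelled edge: subject --predicate--> obj."""
    subject: str
    predicate: str
    obj: str


def match(graph: set[Triple], s: Optional[str] = None,
          p: Optional[str] = None, o: Optional[str] = None) -> list[Triple]:
    """Return the triples matching a pattern; None matches anything."""
    return sorted(
        t for t in graph
        if (s is None or t.subject == s)
        and (p is None or t.predicate == p)
        and (o is None or t.obj == o)
    )


# Hypothetical example statements.
graph = {
    Triple("Alice", "worksFor", "AcmeCorp"),
    Triple("Bob", "worksFor", "AcmeCorp"),
    Triple("AcmeCorp", "locatedIn", "Helsinki"),
}

# Who works for AcmeCorp? Edge direction matters: we match on the object side.
for t in match(graph, p="worksFor", o="AcmeCorp"):
    print(t.subject)
```

Because every fact has the same three-part shape, adding a new kind of relationship needs no schema change, which is one reason the triple model fits loosely structured knowledge better than fixed relational tables.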

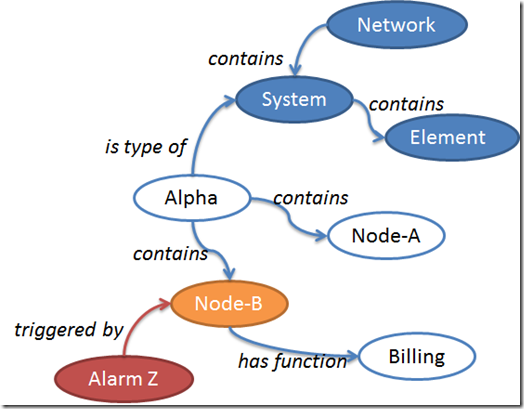

Creating an ontology: the graph concept

When explaining the ontology-powered information management concept, it is reasonable to use examples familiar to the audience. The following sample was crafted for a session with the NSN architect team, so it takes place in the telecom world. I dug out some statements on one of their system products, as stated on the NSN web site:

- ASPEN, an industry-leading Advanced Service Platform for Ethernet Networks

- A-2200 is an access product often configured in ASPEN.
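As a rough illustration, the two statements above could be hand-modelled as triples like this. The `ex:` class and property names (`ex:ServicePlatform`, `ex:AccessProduct`, `ex:oftenConfiguredIn`) are hypothetical vocabulary invented for this sketch; `rdf:type` and `rdfs:label` are the standard RDF/RDFS terms.

```python
# The NSN statements, hand-modelled as (subject, predicate, object) triples.
# All "ex:" names are hypothetical vocabulary chosen for this illustration.
ontology = {
    ("ex:ASPEN", "rdf:type", "ex:ServicePlatform"),
    ("ex:ASPEN", "rdfs:label", "Advanced Service Platform for Ethernet Networks"),
    ("ex:A-2200", "rdf:type", "ex:AccessProduct"),
    ("ex:A-2200", "ex:oftenConfiguredIn", "ex:ASPEN"),
}


def subjects(graph, predicate, obj):
    """All nodes that have a `predicate` edge pointing at `obj`."""
    return sorted(s for s, p, o in graph if p == predicate and o == obj)


def objects(graph, subject, predicate):
    """All nodes that `subject` points at via `predicate`."""
    return sorted(o for s, p, o in graph if s == subject and p == predicate)


print(subjects(ontology, "ex:oftenConfiguredIn", "ex:ASPEN"))  # ['ex:A-2200']
print(objects(ontology, "ex:A-2200", "rdf:type"))  # ['ex:AccessProduct']
```

The same graph answers questions in both directions (what is configured in ASPEN, and what A-2200 is) without any extra join tables.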

Populating the ontology

In this case, the audience understood the concept in a flash, but they were more suspicious about how the ontology could be populated from existing data sources. You might take the trouble to create the conceptual data model by hand, but it makes no sense to handcraft the actual system data in the ontology. They were absolutely right, and luckily there are plenty of tools that will do it for you, such as Ontology-4 by Ontology Systems. Let’s not go into the technology in this post. Some then asked how you would turn database data into RDF: can you retain the semantics? That was an excellent question. The semantics in and around the data are given and constant to start with. You usually cannot increase the level of semantics during the transformation, unless you bring in some content analytics and/or learn something from the context. I then drew an example of network data in a database and how it is transformed into graphs.

1. Create access to the DB

2. The semantic engine analyses the DB tables

3. The engine stores the data and its context as graphs
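The three steps can be sketched with Python’s built-in `sqlite3`, loosely in the spirit of the W3C “Direct Mapping” idea: each row becomes a node, its table becomes the node’s type, columns become edges, and foreign keys become links to other nodes. This is not how Ontology-4 or any other product works; the `platform`/`element` tables, the `BASE` URI and the single-column primary key are all assumptions made for the illustration.

```python
import sqlite3

BASE = "http://example.org/network/"  # hypothetical base URI for generated nodes


def db_to_triples(conn):
    """Turn every row of every table into triples (a direct-mapping-style sketch)."""
    triples = []
    # Step 2: analyse the DB by reading table names from the schema catalogue.
    tables = [r[0] for r in conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name")]
    for table in tables:
        # Table names come from the schema itself, so interpolating them is safe here.
        info = conn.execute(f"PRAGMA table_info({table})").fetchall()
        columns = [c[1] for c in info]
        pk = next(c[1] for c in info if c[5])  # assumes a single-column primary key
        # Foreign keys (column -> referenced table) become edges to other nodes.
        fks = {f[3]: f[2] for f in conn.execute(f"PRAGMA foreign_key_list({table})")}
        for row in conn.execute(f"SELECT * FROM {table}"):
            record = dict(zip(columns, row))
            subject = f"{BASE}{table}/{record[pk]}"
            # Step 3: keep the table as context by typing the node with it ...
            triples.append((subject, "rdf:type", f"{BASE}{table}"))
            # ... and store each remaining non-null column as an edge.
            for col in columns:
                if col == pk or record[col] is None:
                    continue
                obj = f"{BASE}{fks[col]}/{record[col]}" if col in fks else str(record[col])
                triples.append((subject, f"{BASE}{table}#{col}", obj))
    return triples


# Step 1: create access to a (here in-memory, hypothetical) network database.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE platform (id TEXT PRIMARY KEY, name TEXT);
    CREATE TABLE element (id TEXT PRIMARY KEY, model TEXT,
                          platform_id TEXT REFERENCES platform(id));
    INSERT INTO platform VALUES ('P1', 'ASPEN');
    INSERT INTO element VALUES ('NE42', 'A-2200', 'P1');
""")
for triple in db_to_triples(conn):
    print(triple)
```

Note that the mapping only carries over the semantics already present in the schema (table names, column names, foreign keys); it does not add new meaning, which matches the point made above.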

You then get a graph something like this:

The interesting part is that, should you have more related data in other systems, say alarms triggered by a particular network element, you can relatively easily merge that data into the ontology and see the impact of the isolated alarm data on the whole network. And the story goes on. As you also merge customer data from the CRM, you can see which customers are impacted when Alarm Z hits the network.
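A minimal sketch of that merge-and-trace scenario: three graphs from separate sources are merged by set union (assuming shared node names identify the same real-world things), and a breadth-first walk from the alarming element finds the affected customers. The node names and the `raisedBy`, `connectedTo` and `servedBy` predicates are hypothetical, and treating `connectedTo` as “downstream dependency” is an assumption of this example.

```python
from collections import deque

# Hypothetical triples from three separate systems, already converted to graphs.
network = {
    ("NE42", "connectedTo", "NE7"),
    ("NE7", "connectedTo", "NE9"),
}
alarms = {("AlarmZ", "raisedBy", "NE42")}
crm = {
    ("CustomerA", "servedBy", "NE7"),
    ("CustomerB", "servedBy", "NE9"),
    ("CustomerC", "servedBy", "NE100"),
}

# Merging is a set union, assuming equal node names denote the same thing.
graph = network | alarms | crm


def impacted_customers(graph, alarm):
    # Start from the element(s) that raised the alarm.
    start = [o for s, p, o in graph if s == alarm and p == "raisedBy"]
    seen, queue = set(start), deque(start)
    # Breadth-first walk along connectedTo edges (assumed to point downstream).
    while queue:
        node = queue.popleft()
        for s, p, o in graph:
            if s == node and p == "connectedTo" and o not in seen:
                seen.add(o)
                queue.append(o)
    # Customers served by any reached element are impacted.
    return sorted(s for s, p, o in graph if p == "servedBy" and o in seen)


print(impacted_customers(graph, "AlarmZ"))  # ['CustomerA', 'CustomerB']
```

No table joins were designed up front: once the sources share identifiers, the question simply becomes a walk over the merged graph.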

With semantic technology all this is easier and a lot cheaper to do since:

- a harmonized model across systems, organizations and data processes can be created “above the system space” without complex data integrations

- the semantic engine comes with content analysis capabilities

- the semantic engine comes with query capabilities, which enable fast and effective search-style data integration

- the semantic engine often provides an inference tool for reasoning (what-if analysis, etc.)
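To give a flavour of what an inference tool does, here is a small forward-chaining sketch that applies two standard RDFS entailment rules until no new facts appear: subclass transitivity (rdfs11) and type propagation along subclasses (rdfs9). Real reasoners support far richer rule sets; the `ex:` class hierarchy below is hypothetical.

```python
def infer(graph):
    """Forward-chain two RDFS rules until nothing new can be derived:
    rdfs11: (A subClassOf B), (B subClassOf C) => (A subClassOf C)
    rdfs9:  (x type A), (A subClassOf B)       => (x type B)
    """
    graph = set(graph)
    while True:
        sub = {(s, o) for s, p, o in graph if p == "rdfs:subClassOf"}
        new = {(a, "rdfs:subClassOf", c) for a, b in sub for b2, c in sub if b == b2}
        new |= {(x, "rdf:type", b) for x, p, a in graph if p == "rdf:type"
                for a2, b in sub if a == a2}
        if new <= graph:  # fixpoint reached: no rule produced anything new
            return graph
        graph |= new


# Hypothetical facts: only the direct type of A-2200 is stated explicitly.
facts = {
    ("ex:A-2200", "rdf:type", "ex:AccessProduct"),
    ("ex:AccessProduct", "rdfs:subClassOf", "ex:NetworkElement"),
    ("ex:NetworkElement", "rdfs:subClassOf", "ex:Asset"),
}
closure = infer(facts)
print(sorted(o for s, p, o in closure if s == "ex:A-2200" and p == "rdf:type"))
# ['ex:AccessProduct', 'ex:Asset', 'ex:NetworkElement']
```

The inferred facts were never stored by any source system; they follow from the model, which is what lets queries ask about “all assets” without enumerating every product type.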

Fake pearls for pigs?

There are many kinds of semantic tools available, and some of them may give you a kick start in improving your content management. For example, you can improve the findability of information with an auto-tagging tool, which adds keywords or suggests terms in order to categorize your documents or intranet pages. Of course, the more advanced capabilities give more, but they also demand more from the organization, systems and people. The two main challenges in implementing and delivering on the promises of semantic CM are:

- The knowledge representation layer is hard to get right. It must reflect reality at a sufficient level of detail, but not too closely. Different applications, not to mention people, have different expectations of what the data model means, and consequently they interpret models differently. Even a carefully modeled ontology is still suitable only for a particular domain, and generalization leads to inaccuracies and misinterpretations. Learning systems could bring some relief, but it is difficult to make computers think like mere mortals.

- An organization trying to use advanced IM/CM technologies often has too low a maturity (processes, level of people’s competences). Also, its overall level of capabilities may not be adequate to support the desired new systems and culture (say, measured using the EA dimensions: business, information, application and technology architecture). Do not dream of knowledge integration if there is no common metadata model or data standards and most of the systems are developed to meet local needs.
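Returning to the simplest entry point mentioned above, auto-tagging, here is a deliberately naive sketch: a small controlled vocabulary maps each preferred term to alternative labels, and a document is tagged with every term whose label appears as a whole word. The vocabulary and document are hypothetical; commercial tagging tools use proper linguistic analysis rather than plain regular expressions.

```python
import re

# Hypothetical controlled vocabulary: preferred term -> lowercase alternative labels.
VOCABULARY = {
    "Ethernet": {"ethernet", "carrier ethernet"},
    "Alarm management": {"alarm", "alarms", "fault"},
    "Customer": {"customer", "customers", "subscriber"},
}


def suggest_tags(text: str) -> list[str]:
    """Suggest preferred terms whose labels occur as whole words in the text."""
    lowered = text.lower()
    tags = []
    for term, labels in VOCABULARY.items():
        # \b ensures "alarm" does not match inside an unrelated longer word.
        if any(re.search(rf"\b{re.escape(label)}\b", lowered) for label in labels):
            tags.append(term)
    return sorted(tags)


doc = "Fault report: the ASPEN node lost Carrier Ethernet links for one subscriber."
print(suggest_tags(doc))  # ['Alarm management', 'Customer', 'Ethernet']
```

Even this toy version shows the first challenge above: the quality of the tags depends entirely on how well the vocabulary reflects the domain.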

W3C: Semantic Web: Data on the Web

You have probably seen this stack already and it is finally becoming a reality in advanced IM software products.

Machine-processable, global Web standards:

• Assigning unambiguous names (URI)

• Expressing data, including metadata (RDF)

• Capturing ontologies (OWL)

• Query, rules, transformations, deployment, application spaces, logic, proofs, trust.
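As a small illustration of the first two layers, the sketch below names things with full URIs and serializes the ASPEN statements in N-Triples, the simplest line-based RDF syntax (`<subject> <predicate> <object> .`). The `http://example.org/telecom#` namespace and its terms are hypothetical; the `rdf:type` and `rdfs:label` URIs are the standard W3C ones.

```python
EX = "http://example.org/telecom#"  # hypothetical namespace
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"


def iri(value):
    """An unambiguous name, written as an IRI reference."""
    return f"<{value}>"


def literal(value):
    """A plain string literal with the N-Triples escapes applied."""
    escaped = (value.replace("\\", "\\\\").replace('"', '\\"')
               .replace("\n", "\\n").replace("\r", "\\r"))
    return f'"{escaped}"'


def to_ntriples(triples):
    return "\n".join(f"{s} {p} {o} ." for s, p, o in triples)


triples = [
    (iri(EX + "ASPEN"), iri(RDF_TYPE), iri(EX + "ServicePlatform")),
    (iri(EX + "ASPEN"), iri(RDFS_LABEL),
     literal("Advanced Service Platform for Ethernet Networks")),
    (iri(EX + "A-2200"), iri(EX + "oftenConfiguredIn"), iri(EX + "ASPEN")),
]
print(to_ntriples(triples))
```

Because every name is a global URI, another system publishing statements about `<http://example.org/telecom#ASPEN>` is unambiguously talking about the same thing, which is what makes merging data across the web possible.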

Semantic web today

Key driving forces are:

1. Linked Open Data as a concept for “hyper data”

2. http://schema.org/ for SEO, and

3. Programmable Web as a global API for cloud age.
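For the schema.org point, a typical pattern is to embed a JSON-LD description in a web page so that search engines can read structured data about it. A minimal sketch, generating such a snippet for the ASPEN example from earlier; the choice of the `Product` type and these particular properties is an illustrative assumption, not a recommendation for any specific page.

```python
import json

# A schema.org description of a product, serialized as JSON-LD.
# The values reuse the ASPEN example; the chosen properties are illustrative.
markup = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "ASPEN",
    "description": "Advanced Service Platform for Ethernet Networks",
}

# JSON-LD is embedded in HTML inside a script element of this type.
snippet = f'<script type="application/ld+json">\n{json.dumps(markup, indent=2)}\n</script>'
print(snippet)
```

The page content stays the same for human readers; the embedded block gives crawlers the “things” behind the text.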

Of course, there is more to come. Google Search has been using a knowledge graph for more than a year to bring up “things – not just strings” in the search results (thanks to the Freebase integration).

Cheers, Heimo Hänninen, Talent Base
